One of the more interesting e-commerce features I’ve seen recently is the ability to search for products that look like an image I have on my phone. For example, I can take a picture of a pair of shoes or other product then search a product catalog to find similar items. Getting started with a feature like this can be a fairly straight forward project the right tools. And if we can frame a problem as a vector search problem, then we can use Postgres to solve it!

In this blog we’ll build a basic image search engine using Postgres. We’ll use a pre-trained model to generate embeddings for images and text, then store those embeddings in Postgres. The pgvector extension will enable us to conduct similarity searches on these embeddings using both images and raw-text as queries.

Image Search with CLIP and Postgres

In 2021, OpenAI published a paper and model weights for CLIP (Contrastive Language-Image Pre-Training), a model trained to predict the most relevant text snipped given an image. With some clever implementation, this model can also be used as the backbone for a search engine that accepts images and text as the input queries. We can transform images into vectors (embeddings), store the image’s embeddings in Postgres, use extensions to conduct similarity searches on these vectors, and use this to build an image search engine on top of Postgres. There are many open source variants of CLIP models available on Hugging Face but we will use OpenAI’s clip-vit-base-patch32 mode for this demonstration.

In previous blogs, we’ve written about generating embeddings for semantic text search. Some of those principles also apply here. We’ll generate embeddings for our repository of data which in this case is a directory of images. We will then store those embeddings in Postgres. When we query the data, we need to use the same model to generate embeddings for the query. The difference is that in this case, our model will generate embeddings for both text and images.

For this example, we’ll use one of OpenAI’s open source CLIP models available on Hugging Face. Note, the stated limitations to the use of CLIP for production use. It is incredibly convenient to work with these models since their interfaces are available in the transformers Python library.

Setup

All the code in this blog is available in a notebook in the Tembo Github repository. We’ll run through some snippets of that notebook code in this blog, but the easiest way to follow along is to run Postgres and the accompanying Jupyter notebook on your machine.

git clone https://github.com/tembo-io/tembo-labs.git

cd examples/image-searchRefer to the setup instructions in the project README and follow the notebook.

Loading Postgres with Image Embeddings

First we need to acquire the raw images. We are using an Amazon Products dataset from Kaggle. The dataset contains urls to the images for each of the example products, so we’ll download the images and store them in a directory.

We are going to store the image files locally for this example, but in a production system you might store them in a cloud storage service like S3.

import pandas as pd

df = pd.read_csv("data/amazon_product.csv")

for i, row in df.iterrows():

url = row["product_photo"]

asin = row["asin"]

response = requests.get(url)

img = Image.open(BytesIO(response.content))

if img.mode == 'RGBA':

img = img.convert('RGB')

img.save(f"./data/{asin}.jpg")Next we need to generate embeddings for the images we acquired. We’ll setup a table in Postgres to store the embeddings.

CREATE TABLE IF NOT EXISTS image_embeddings (

image_path TEXT PRIMARY KEY,

embeddings VECTOR(512)

);We’ll use the CLIP model to generate embeddings for each image, and save them into a Postgres table. And create a few helper functions for loading our images, generating embeddings, and getting them inserted into Postgres.

from pydantic import BaseModel

from transformers import (

CLIPImageProcessor,

CLIPModel,

)

MODEL = "openai/clip-vit-base-patch32"

image_processor = CLIPImageProcessor.from_pretrained(MODEL)

image_model = CLIPModel.from_pretrained(MODEL)

class ImageEmbedding(BaseModel):

image_path: str

embeddings: list[float]

def get_image_embeddings(

image_paths: list[str], normalize=True

) -> list[ImageEmbedding]:

# Process image and generate embeddings

images = []

for path in image_paths:

images.append(Image.open(path))

inputs = image_processor(images=images, return_tensors="pt")

with torch.no_grad():

outputs = image_model.get_image_features(**inputs)

image_embeddings: list[ImageEmbedding] = []

for image_p, embedding in zip(image_paths, outputs):

if normalize:

embeds = F.normalize(embedding, p=2, dim=-1)

else:

embeds = embedding

image_embeddings.append(

ImageEmbedding(

image_path=image_p,

embeddings=embeds.tolist(),

)

)

return image_embeddings

def list_jpg_files(directory: str) -> list[str]:

# List to hold the full paths of files

full_paths = []

# Loop through the directory

for filename in os.listdir(directory):

# Check if the file ends with .jpg

if filename.endswith(".jpg"):

# Construct full path and add it to the list

full_paths.append(os.path.join(directory, filename))

return full_paths

def pg_insert_embeddings(images: list[ImageEmbedding]):

init_pg_vector = "CREATE EXTENSION IF NOT EXISTS vector;"

init_table = """

CREATE TABLE IF NOT EXISTS image_embeddings (image_path TEXT PRIMARY KEY, embeddings VECTOR(512));

"""

insert_query = """

INSERT INTO image_embeddings (image_path, embeddings)

VALUES (%s, %s)

ON CONFLICT (image_path)

DO UPDATE SET embeddings = EXCLUDED.embeddings

;

"""

with psycopg.connect(DATABASE_URL) as conn:

with conn.cursor() as cur:

cur.execute(init_pg_vector)

cur.execute(init_table)

for image in images:

cur.execute(insert_query, (image.image_path, image.embeddings))Our helper functions are so let’s execute them sequentially.

# get the paths to all our jpg images

images = list_jpg_files("./images")

# generate embeddings

image_embeddings = get_image_embeddings(images)

# insert them into Postgres

pg_insert_embeddings(image_embeddings)Quickly verify that the embeddings were inserted into Postgres. We should see

psql postgres://postgres:postgres@localhost:5433/postgres

\x

select image_path, embeddings from image_embeddings limit 1;image_path | ./data/B086QB7WZ1.jpg

embeddings | [0.01544646,0.062326625,-0.03682831,0 ...Searching for Similar Images With pgvector

Now that we have a function to generate embeddings for text we can use those embeddings in a vector similarity search query. pgvector supports several distance operators, but we’ll use cosine similarity for this example. The embeddings that we are searching are stored in Postgres, so we can use SQL to do a cosine similarity search (1 - cosine similarity) and find the images that have embeddings that are most similar to the embeddings of our text query.

def similarity_search(txt_embedding: list[float]) -> list[tuple[str, float]]:

with psycopg.connect(DATABASE_URL) as conn:

with conn.cursor() as cur:

cur.execute(

"""

SELECT

image_path,

1 - (embeddings <=> %s::vector) AS similarity_score

FROM image_embeddings

ORDER BY similarity_score DESC

LIMIT 2;

""",

(txt_embedding,),

)

rows = cur.fetchall()

return [(row[0], row[1]) for row in rows]Similar to conducting vector search on data with raw-text, we will use embeddings to search for similar images.

Let’s grab an image of Cher, we can use the image from her Wikipedia page.

Save it to ./cher_wikipedia.jpg.

Now we can pass that single image into our get_image_embeddings() function and then search for similar images using `similarity_search().

search_embeddings = get_image_embeddings(["./cher_wikipedia.jpg"])[0].embeddings

results = similarity_search(search_embeddings)

for image_path, score in results[:2]:

print((image_path, score))('B0DBQY1PKS.jpg', 0.5851975926639095)

('B0DBR4KDRF.jpg', 0.5125825695644287)Products B0DBQY1PKS and B0DBR4KDRF, (Cher’s Forever album) which are were the top two products most similar to our image of Cher.

Querying for Images with Raw Text

Searching for images that are similar to one another can be very helpful when searching for products. However, sometimes people will want to search for images given a text string. For example, Google has for a long time had the ability to search for images of cats.

from transformers import (

CLIPTokenizerFast,

CLIPTextModel,

CLIPImageProcessor

)

MODEL = "openai/clip-vit-base-patch32"

processor = CLIPProcessor.from_pretrained(MODEL)

clip_model = CLIPModel.from_pretrained(MODEL)

def get_text_embeddings(text: str) -> list[float]:

inputs = processor(text=[text], return_tensors="pt", padding=True)

text_features = clip_model.get_text_features(**inputs)

text_embedding = text_features[0].detach().numpy()

embeds = text_embedding / np.linalg.norm(text_embedding)

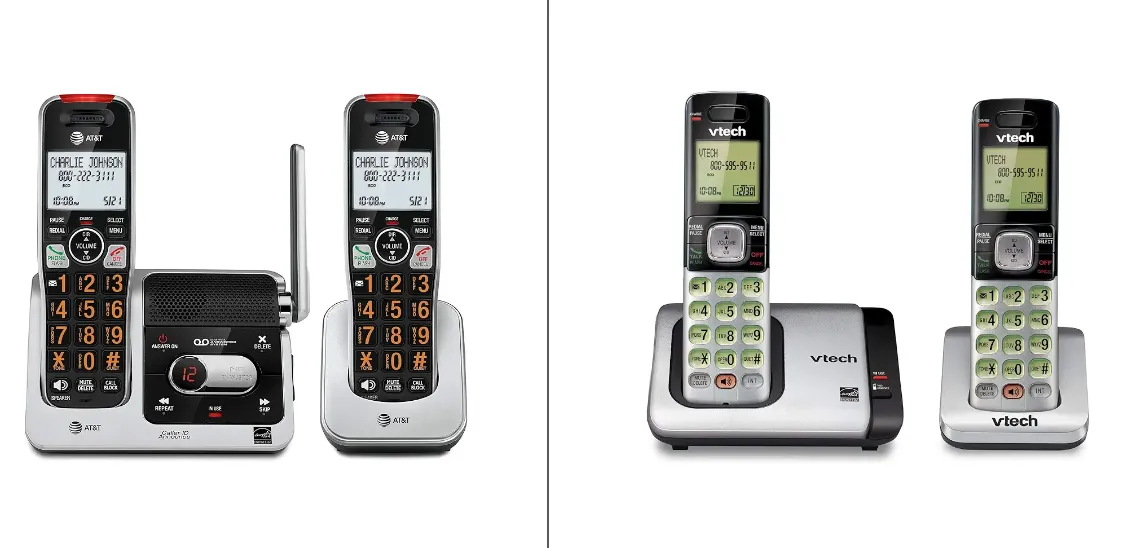

return embeds.tolist()Finally, we can use these functions to generate the embeddings and then search our images with a raw text query. We’ll search our product catalog for images of “telephones”.

text_embeddings = get_text_embeddings("telephones")

results: list[tuple[str, float]] = similarity_search(search_embeddings)

for image_path, score in results[:2]:

print((image_path, score))('./data/B086QB7WZ1.jpg', 0.26320752344041964)

('./data/B00FRSYS12.jpg', 0.2626421138474824)Products B086QB7WZ1 and B00FRSYS12 are the top two most similar images to the text query “telephones”.

Multi-Modal Search on Postgres

We’ve shown conceptually how to build a multi-modal search engine on Postgres.

As a reminder, of the code in this blog is available in Tembo Github repository.

We used the CLIP model to generate embeddings for both images and text, then stored those embeddings in Postgres.

We used the pgvector extension to conduct similarity searches on these embeddings.

This is a powerful tool for building search engines that can accept both text and image queries.

Follow the Tembo blog learn more about use cases for vector search on Postgres.

Additional Reading

If you are interested in this topic, check out the geoMusings blog on image similarity with pgvector. Also read A Simple Framework for Contrastive Learning of Visual Representations, ICML2020, Ting ChenSimon Kornblith, Mohammad Norouzi, Geoffrey E. Hinton.